Hardware Acceleration?

Could AI be the next big thing for GPGPU processing to tackle in games? There aren’t many gaming features that don’t have a hardware accelerator these days. A GPU can accelerate both 3D graphics and physics, while a decent sound card can accelerate advanced surround-sound effects. As AI is such a fundamental part of gameplay, shouldn’t we be accelerating that with hardware too?

In 2005, an Israeli company called AIseek announced a dedicated AI processor called the Intia, which was designed to accelerate some AI features, including terrain analysis and path finding. However, we’ve yet to see any products based on the technology. The problem with this sort of dedicated hardware is that AI is such a fundamental part of gameplay that it can’t be optional.

As such, an AI processor would need to be a part of a standard PC.

This is what makes AI ripe for the picking when it comes to GPGPU technology. Almost every PC gamer has a graphics card, and providing it’s compatible with Nvidia’s CUDA or AMD’s Stream technology (or a cross-platform GPU API such as OpenCL), it could be used to take some of the load from the CPU when it comes to repetitive AI processing.

AMD’s head of developer relations, Richard Huddy, explains that the most common AI tasks involve visibility queries and path finding queries. "Our recent research into AI suggests that it isn’t uncommon for gaming AI to spend more than 90 per cent of its time resolving these two simple questions," says Huddy. He adds that these two queries are "almost perfect for GPU implementation", since they "make excellent use of the GPU’s inherently parallel architecture and typically aren’t memory-bound".

Could the GPU soon be accelerating AI in games?

Nvidia agrees with this. Director of product management for PhysX, Nadeem Mohammad, explained that "the simple, complex operations" involved with pathfinding and collision detection "are all very repetitive, so pathfinding is one of the algorithms that works very well on CUDA". Mohammad adds that ray tracing via CUDA could play a useful part in AI when it comes to visibility queries. We aren’t talking about graphical ray tracing, but tracing a ray from a bot in order to work out what it can see. "You have to shoot rays from point A to point B to see if they hit anything," says Mohammad. "We do the same calibration in PhysX for operations such as collision detection.

"You can always imagine CUDA as loads of processors running the same program but not the same instruction, and ideally on the same data set but with different input parameters," adds Mohammad. "So, in the context of AI, the data set consists of the whole game world, and the parameters going into it are the individual bots – that’s one way of neatly parallelising the problem. If you look at it in that context then any AI program could be accelerated."
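To make Mohammad’s description concrete, here is a minimal CPU-side sketch of the pattern in Python rather than CUDA. Everything in it is an assumption made for illustration: the tiny `WORLD` grid, the `PLAYER` position, the `can_see` and `kernel` helpers and the sampled ray test are all hypothetical, and a thread pool merely stands in for the GPU’s many processors. The point is only the shape of the problem: one shared, read-only data set, one program, and a different bot as the input parameter for each worker.

```python
from concurrent.futures import ThreadPoolExecutor

# Shared, read-only "data set": a tiny hypothetical grid world (1 = wall).
WORLD = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
PLAYER = (4, 2)  # (x, y) position of a hypothetical target every bot looks for


def can_see(world, start, end, samples=32):
    """Sample points along the ray from start to end; a wall cell blocks it."""
    (x0, y0), (x1, y1) = start, end
    for i in range(samples + 1):
        t = i / samples
        x = round(x0 + (x1 - x0) * t)
        y = round(y0 + (y1 - y0) * t)
        if world[y][x] == 1:
            return False
    return True


def kernel(bot):
    """The 'same program' every worker runs; only the bot parameter differs."""
    return can_see(WORLD, bot, PLAYER)


# One work item per bot, each writing only its own result.
bots = [(0, 0), (0, 2), (4, 0)]
with ThreadPoolExecutor() as pool:
    results = list(pool.map(kernel, bots))

print(results)  # [False, True, True]
```

Because no bot’s query depends on another bot’s answer, the work items can run in any order, which is the property that would let a GPU process them side by side.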

Both AMD and Nvidia claim to be working with several game developers and middleware developers in AI. According to Huddy, "some middleware providers are looking at this in terms of packaging a GPU AI library for games, while some developers are looking to transfer their own existing AI code from CPU to GPU".

Mohammad estimates that we’ll see GPGPU-accelerated AI soon. "I don’t expect it within a year," he says, "but definitely within 18 months." GPGPU-accelerated AI also appeals to game developers, who are constantly on the lookout for ways to make their systems intelligent without using up valuable CPU resources. "I think there’s a lot of potential for GPU acceleration to benefit AI," says Chris Jurney, Relic’s senior programmer on Dawn of War 2. "All our AI is grid-based, and we’re already using rasterisation to keep our maps up to date, and for line-draws on those maps to test for passability, so it’s a great match."
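As a rough illustration of the kind of line-draw passability test Jurney mentions, the sketch below walks a Bresenham line across a grid and reports whether any cell on it is blocked. This is not Relic’s code: the `GRID` layout and the `line_cells` and `is_passable` helpers are hypothetical, and Bresenham’s algorithm is simply one common way to draw a line on a grid.

```python
def line_cells(x0, y0, x1, y1):
    """Yield the grid cells on a Bresenham line from (x0, y0) to (x1, y1)."""
    dx, dy = abs(x1 - x0), abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx - dy
    while True:
        yield x0, y0
        if (x0, y0) == (x1, y1):
            return
        e2 = 2 * err
        if e2 > -dy:  # step along x
            err -= dy
            x0 += sx
        if e2 < dx:   # step along y
            err += dx
            y0 += sy


def is_passable(grid, start, goal):
    """True if no cell on the straight line between start and goal is blocked."""
    return all(grid[y][x] == 0 for x, y in line_cells(*start, *goal))


# Hypothetical passability map: 0 = open, 1 = blocked.
GRID = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

print(is_passable(GRID, (0, 0), (3, 0)))  # True: the top row is open
print(is_passable(GRID, (0, 0), (2, 2)))  # False: the diagonal crosses (1, 1)
```

Each test reads the map but never writes to it, so many such lines could be checked independently of one another, which is part of why grid-based AI looks like a natural fit for graphics hardware.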

Crytek’s Markus Mohr also supports GPGPU-accelerated AI, saying that "with widely available parallel architectures, we have the opportunity to achieve new levels of quality and quantity". Mohr also notes that it "doesn’t make much sense to develop dedicated hardware for AI," such as the Intia chip. Creative Assembly’s Richard Bull agrees that "hardware advances that can offer processing power will allow us to think of more things that we can possibly do, certainly for planning or prediction". However, he also points out that the battle AI in Empire: Total War "peaks at around two percent of the logic usage" (see image below).

This doesn’t mean that AI isn’t in need of advanced processing power; it just means that AI in its current form has limited resources available to it, and it has to compete with graphics for even a small share of processing power. Bull offers the example of an unbeatable chess computer that "gets to an end state by working out every possible move for every piece. A chess problem like that can, even now, with a lot of processors take hours, weeks or months to work out an optimal solution". Basically, there’s always room for more processing power for AI.

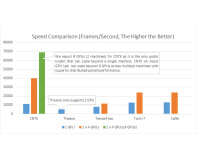

"What runs slowly on the CPU, maybe taking 25 percent of the CPU load, can run efficiently on the GPU, adding only one or two percent to the total GPU load," Richard Huddy points out. Even a small share of a GPU’s resources could boost AI processing power for some tasks, and it looks as though GPU companies and game developers are keen to see this working soon.

Not everyone is convinced that GPGPU is the best way of processing AI though. Bethesda’s Jean-Sylvere Simonet notes that "we might be able to take advantage of parallel architectures, but not for everything. You could probably speed up some individual parts of the decision process, such as replacing your AI search with a brute-force GPU approach, or running a pattern detection algorithm". However, Simonet also points out that "most AI processing is very sequential and usually requires a lot of data.

"For an NPC to decide on its next action, it will usually have to query the world for a tonne of information, and most of that information is conditional on a previous query result. For that reason, fewer processors that are more versatile, such as the SPEs in the PlayStation 3’s Cell chip, are ideal".

It’s also worth noting that both AMD and Nvidia are currently talking about doing this through their own APIs, Stream and CUDA respectively, which would make it hard for GPGPU-accelerated AI to become a standard in the gaming industry. "We rely on the fact that all CPUs in all PCs come up with the exact same result to make multiplayer work," points out Relic’s Chris Jurney. "We’re only transmitting the inputs and commands between players, so if two GPUs decide on slightly different results in the AI, different players will be playing in different worlds."
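Jurney’s concern is easy to reproduce in miniature. Floating-point addition isn’t associative, so adding the same numbers in a different order can give a slightly different total, and a different reduction order is one plausible way two pieces of hardware could disagree. The sketch below is purely illustrative: the threat scores, the `RETREAT_THRESHOLD` rule and both summing functions are hypothetical, and both orders run on the same CPU here.

```python
# Hypothetical threat scores that both players' machines receive identically.
threats = [0.1, 0.2, 0.3]
RETREAT_THRESHOLD = 0.6  # hypothetical rule: retreat only if the total exceeds this


def total_left_to_right(values):
    """Sum in list order, as a single sequential loop would."""
    total = 0.0
    for v in values:
        total += v
    return total


def total_right_to_left(values):
    """Sum in reverse order, standing in for a different reduction order."""
    total = 0.0
    for v in reversed(values):
        total += v
    return total


a = total_left_to_right(threats)
b = total_right_to_left(threats)

print(a, a > RETREAT_THRESHOLD)  # 0.6000000000000001 True
print(b, b > RETREAT_THRESHOLD)  # 0.6 False
```

The two totals differ only in the last binary digit, yet one machine’s bot retreats and the other’s doesn’t. In a game that only transmits inputs and commands, that single disagreement is enough to send the two players’ simulations down different paths.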

Perhaps OpenCL or DirectX 11’s Compute Shader could provide a way to accelerate AI on an even larger array of GPUs. Either way, it looks as though AI could be a major advancement in GPGPU processing.
